Real-Time Data.

Real-Time Decisions.

Change Data Capture for Data Lakes with Near Real-Time Data Sync and Orchestration

Say goodbye to batch-based ETL. Stream changes as they happen. Keep your data lake always fresh and analytics-ready.

What you can do with Tessell CDC for Data Lakes:

Real-Time Replication

Continuous ML Training with Fresh Operational Data

Seamless Sync

Unified Lakehouse in OneLake

Support for All Clouds (AWS, Azure, GCP) and All Databases

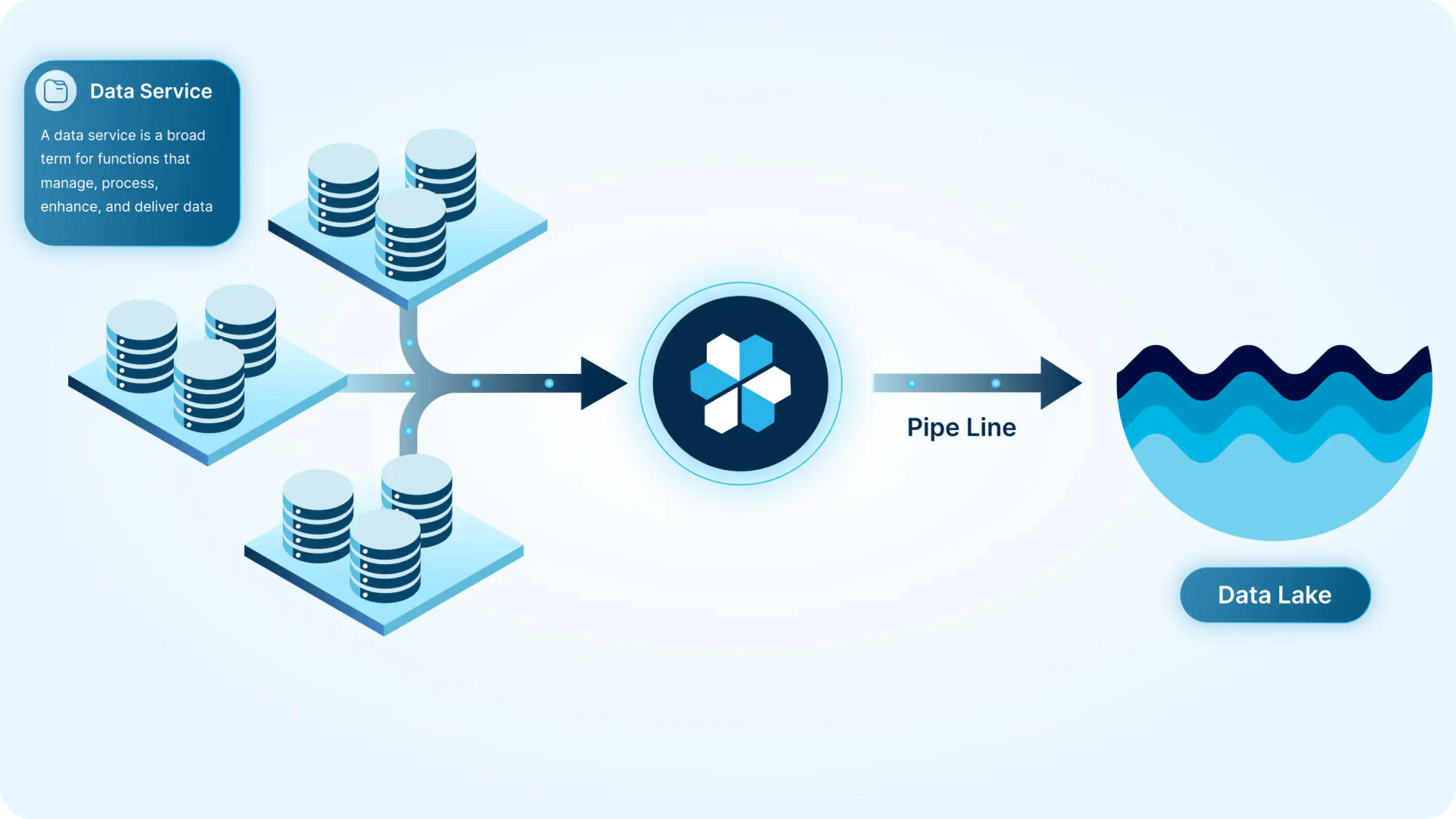

The Bridge Between Operational & Analytical Data

Tessell unifies your operational data (Oracle, PostgreSQL, SQL Server, and more running in the cloud) with your analytical estate (data lakes, warehouses, and lakehouses) through a single control plane that’s built for DBAs, cloud architects, and data teams.

Enabling secure, near real-time pipelines directly into OneLake

Native Fabric Connectors - Direct integration with OneLake, Synapse, and Power BI without custom ETL.

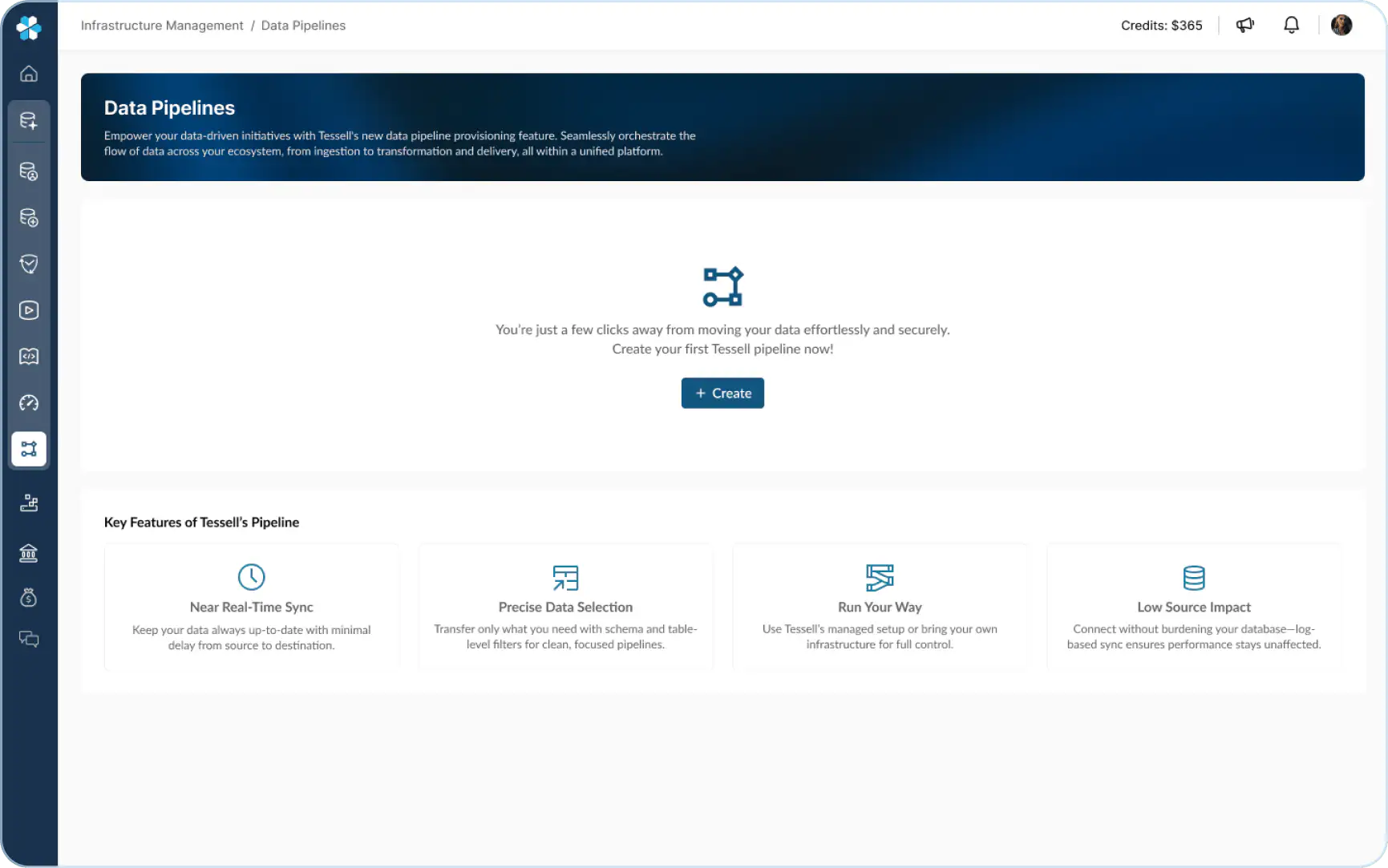

Steps to Create Your Own Data Ecosystem

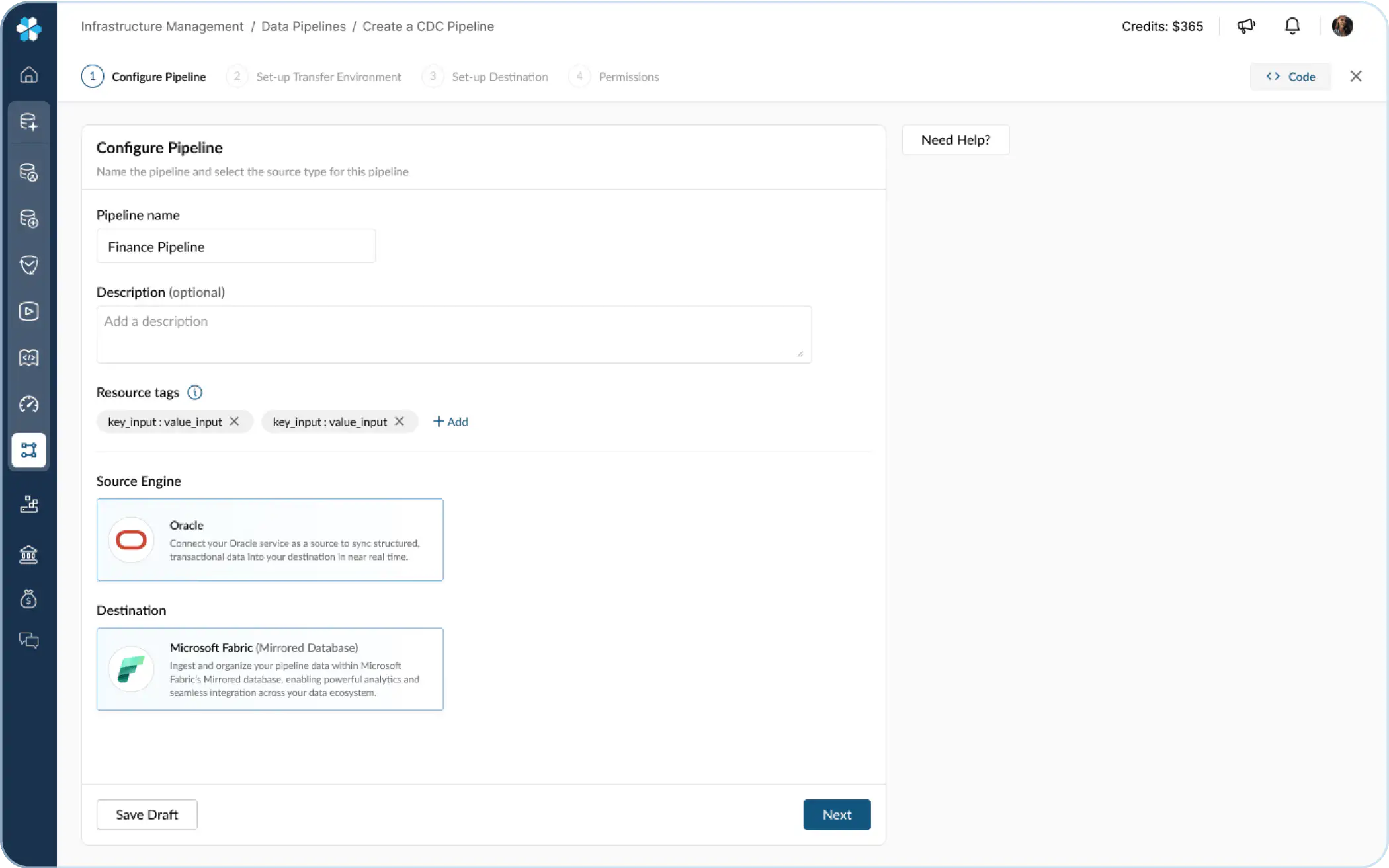

Define the engine type and select the destination endpoint

Set up infrastructure for continuous streaming

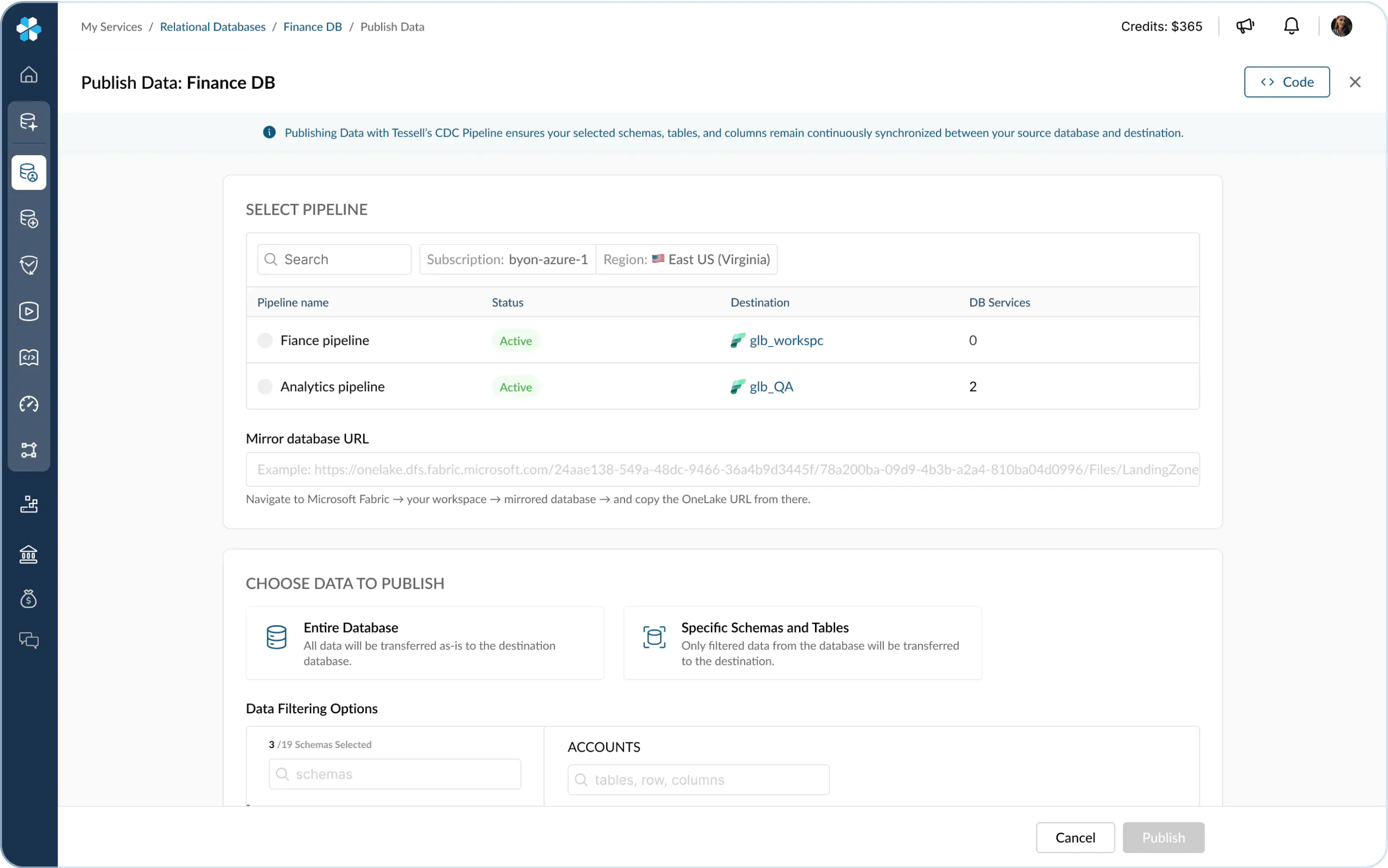

Customize the data that needs to be synced

Bootstrap with a one-time full sync of the database

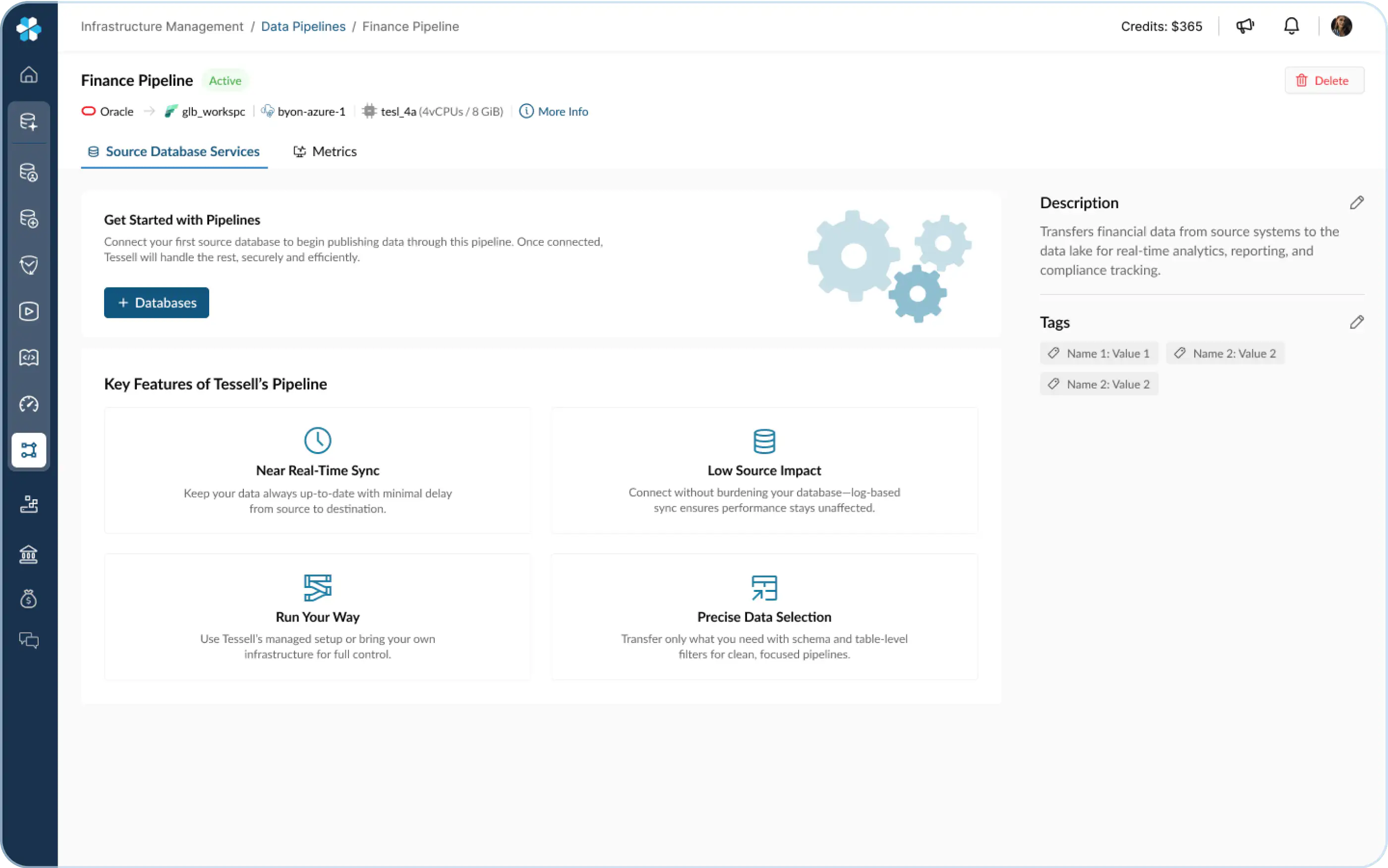

Sync data continuously and monitor the pipeline
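To make the bootstrap and continuous-sync steps concrete, here is a minimal Python sketch of the general change data capture pattern: load a full snapshot once, then apply a stream of row-level changes. It is an illustration only and does not show Tessell's API; `ChangeEvent`, `bootstrap`, and `apply_changes` are hypothetical names, and an in-memory dictionary stands in for the destination table.

```python
from dataclasses import dataclass
from typing import Any, Dict, Iterable, Optional


@dataclass
class ChangeEvent:
    """Hypothetical row-level change record (not a Tessell type)."""
    op: str                                # "insert", "update", or "delete"
    key: Any                               # primary key of the affected row
    row: Optional[Dict[str, Any]] = None   # new row image; None for deletes


def bootstrap(snapshot: Iterable[Dict[str, Any]], key_field: str) -> Dict[Any, Dict[str, Any]]:
    """One-time full sync: copy every source row into the target, keyed by primary key."""
    return {row[key_field]: dict(row) for row in snapshot}


def apply_changes(target: Dict[Any, Dict[str, Any]], events: Iterable[ChangeEvent]) -> None:
    """Continuous sync: apply each change to the target in arrival order."""
    for ev in events:
        if ev.op == "delete":
            target.pop(ev.key, None)       # deleting a missing key is a no-op
        elif ev.op in ("insert", "update"):
            if ev.row is None:
                raise ValueError(f"{ev.op} event for key {ev.key!r} has no row image")
            target[ev.key] = dict(ev.row)  # upsert keeps replays idempotent
        else:
            raise ValueError(f"unknown operation: {ev.op}")


# Example: bootstrap from a snapshot, then apply three later changes.
source_snapshot = [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Lin"}]
target = bootstrap(source_snapshot, "id")
apply_changes(target, [
    ChangeEvent("update", 1, {"id": 1, "name": "Ada L."}),
    ChangeEvent("delete", 2),
    ChangeEvent("insert", 3, {"id": 3, "name": "Grace"}),
])
```

Treating inserts and updates as upserts is a design choice in this sketch: it lets the same events be replayed after a restart without corrupting the target.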

How It Works

Capture

Non-intrusive ingestion from production databases (streaming + micro-batch), honoring source performance and security policies.
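One way to picture micro-batch capture is as reading a change log in small groups and recording a checkpoint after each group, so a restart resumes from the last processed position. The Python sketch below is a generic illustration under that assumption; the page does not describe Tessell's internal mechanism, and `micro_batches`, `change_log`, and the integer log positions are hypothetical.

```python
from itertools import islice
from typing import Iterable, Iterator, List, Tuple

# Each entry is (log position, payload); both are illustrative stand-ins.
LogEntry = Tuple[int, str]


def micro_batches(events: Iterable[LogEntry], batch_size: int) -> Iterator[List[LogEntry]]:
    """Yield consecutive groups of at most batch_size entries, preserving order."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    it = iter(events)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch


change_log = [(101, "a"), (102, "b"), (103, "c"), (104, "d"), (105, "e")]

checkpoint = 0      # last log position that was fully processed
batch_sizes = []
for batch in micro_batches(change_log, batch_size=2):
    batch_sizes.append(len(batch))   # stand-in for writing the batch downstream
    checkpoint = batch[-1][0]        # advance only after the batch is handled
```

Smaller batches reduce latency toward streaming behavior, while larger batches reduce per-write overhead on the destination.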

Curate

Validate, reconcile, and standardize; manage schema evolution safely with versioned contracts.
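As a rough illustration of what a versioned contract can mean in practice, the Python sketch below checks rows against a declared schema version and verifies that a newer version only adds columns. The `CONTRACTS` table, its columns, and both function names are assumptions made for this example, not part of any Tessell interface.

```python
from typing import Any, Dict, List

# Hypothetical contracts: version number -> expected column types.
CONTRACTS: Dict[int, Dict[str, type]] = {
    1: {"id": int, "email": str},
    2: {"id": int, "email": str, "country": str},  # v2 adds a column
}


def is_backward_compatible(old: Dict[str, type], new: Dict[str, type]) -> bool:
    """A new version may add columns but must not drop or retype existing ones."""
    return all(col in new and new[col] is typ for col, typ in old.items())


def validate(row: Dict[str, Any], version: int) -> List[str]:
    """Return a list of contract violations for one row (empty means valid)."""
    errors = []
    for col, typ in CONTRACTS[version].items():
        if col not in row:
            errors.append(f"missing column: {col}")
        elif not isinstance(row[col], typ):
            errors.append(f"wrong type for {col}: expected {typ.__name__}")
    return errors
```

For example, `validate({"id": 1, "email": "a@example.com"}, 1)` returns an empty list, while the same row checked against version 2 reports the missing `country` column.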

Deliver

Land clean, partitioned, analytics-ready data to lakes/warehouses; auto-manage tables, manifests, and formats.
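Partitioned delivery often means grouping rows under date-based directory paths so that query engines can skip irrelevant data. The Python sketch below shows one common layout (Hive-style `key=value` folders); the layout, the `updated_at` column, and the function names are assumptions for illustration rather than a description of how Tessell writes files.

```python
from collections import defaultdict
from datetime import datetime, timezone
from typing import Any, Dict, Iterable, List


def partition_path(table: str, event_time: datetime) -> str:
    """Build a Hive-style partition path from a timezone-aware timestamp."""
    t = event_time.astimezone(timezone.utc)  # normalize so partitions are UTC days
    return f"{table}/year={t.year:04d}/month={t.month:02d}/day={t.day:02d}"


def group_by_partition(table: str, rows: Iterable[Dict[str, Any]]) -> Dict[str, List[Dict[str, Any]]]:
    """Group rows by the partition their 'updated_at' timestamp falls into."""
    groups: Dict[str, List[Dict[str, Any]]] = defaultdict(list)
    for row in rows:
        groups[partition_path(table, row["updated_at"])].append(row)
    return dict(groups)


rows = [
    {"id": 1, "updated_at": datetime(2024, 3, 5, 9, 0, tzinfo=timezone.utc)},
    {"id": 2, "updated_at": datetime(2024, 3, 5, 23, 30, tzinfo=timezone.utc)},
    {"id": 3, "updated_at": datetime(2024, 3, 6, 0, 15, tzinfo=timezone.utc)},
]
partitions = group_by_partition("orders", rows)
```

Each key in `partitions` would correspond to one output directory, with the grouped rows written there in the chosen file format.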

Govern & Observe

Lineage, audits, data quality scores, SLA tracking, and alerting across sources, pipelines, and destinations.

Outcomes

Faster time-to-insight with reliable, incremental feeds from operational systems to your lakehouse.

Fewer data incidents thanks to proactive integrity/quality gates and transparent monitoring.

Lower TCO by consolidating tools, automating ops, and reducing failed/duplicative loads.

Powering Trustworthy Data Movement Across Your Ecosystem

Tessell ensures data flows with integrity, consistency, and observability across lakes, warehouses, and hybrid clouds.

Built for All Your Teams

Connect to What You Use